Members

Pablo Morales, Ph.D.

Chief Researcher

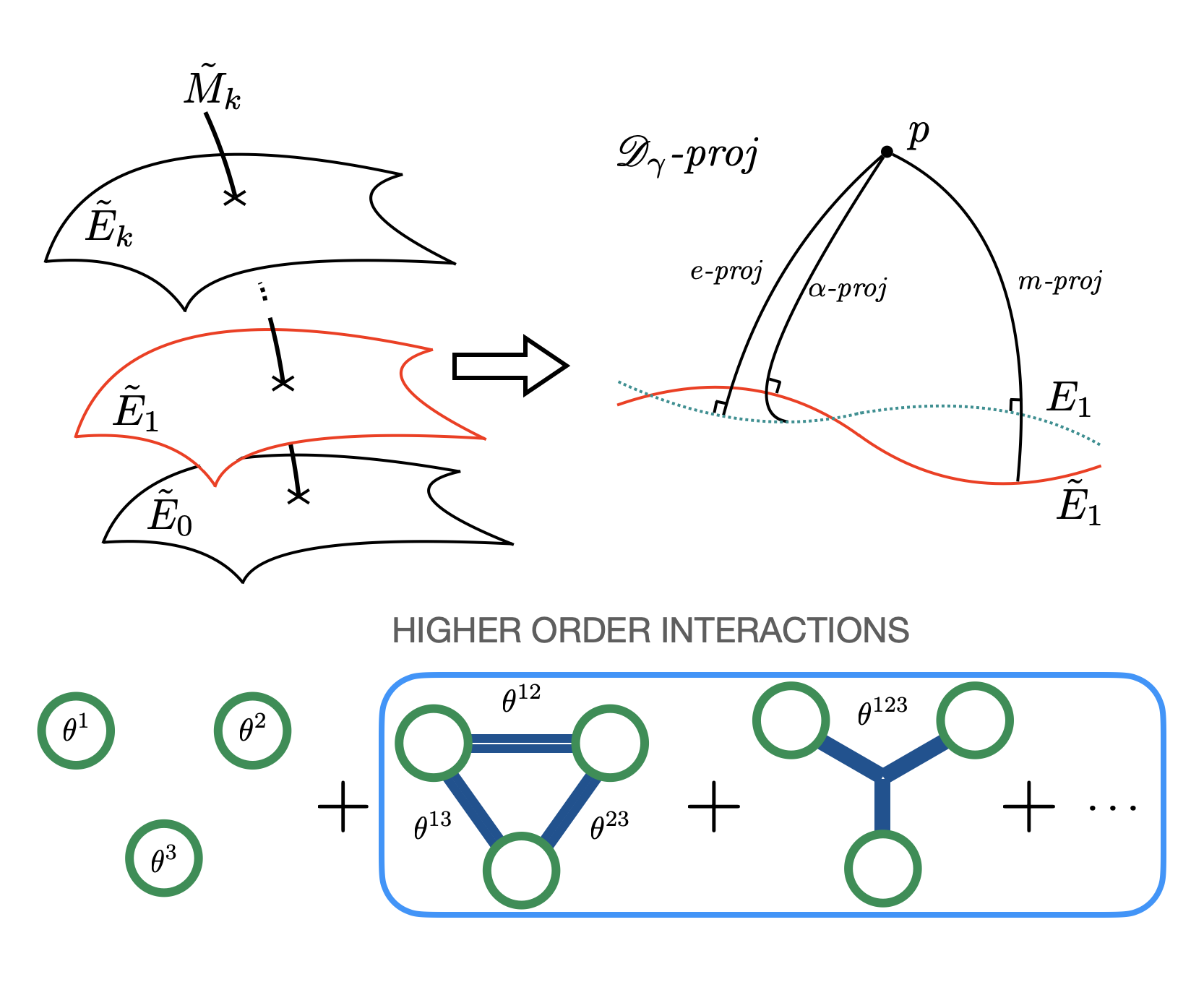

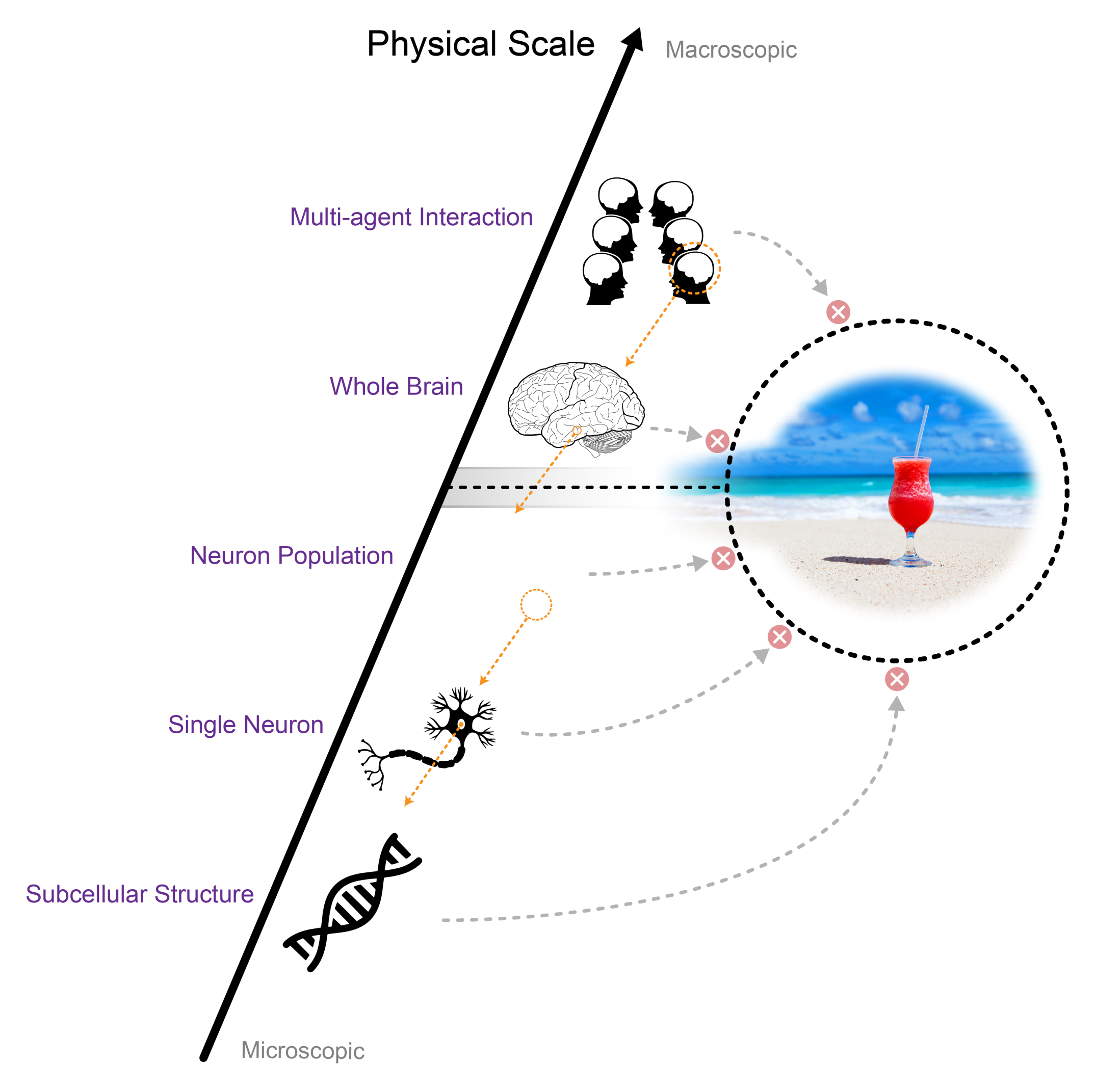

Pablo is a Chief Researcher at Araya. He received his Ph.D. in Theoretical Particle Physics from the University of Tokyo in 2018. He is a former Japan Society for the Promotion of Science (JSPS) fellow and MEXT scholar. His current research interests include information geometry and complex systems applied to theoretical neuroscience.

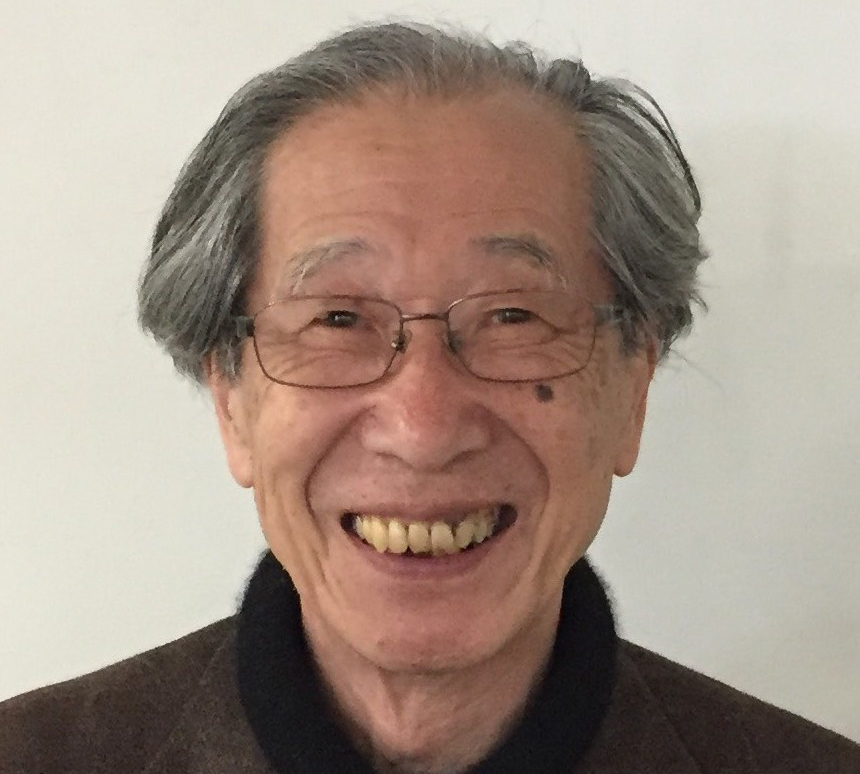

Shun-ichi Amari, Ph.D.

Research Advisor

Dr Shun-ichi Amari is a mathematical neuroscientist. He is a professor emeritus at the University of Tokyo and an honorary science advisor at RIKEN. He initiated information geometry, which has been widely applied in fields such as statistics, signal processing, information theory, and machine learning. He is also one of the pioneers in developing mathematical theories of neural networks. He is currently working on integrated information theory and Wasserstein distance using information geometry.